Strengthening Cybersecurity of Frontier AI Model Companies and Their Supply Chains via DoD Procurement

If the Department of Defense makes strong cybersecurity a prerequisite for AI-related contracts, it could drive widespread adoption of best practices across frontier model companies.

Summary

Export controls on compute and model weights raise the stakes, making it more likely that adversaries will try to steal model weights instead.

Robust cybersecurity at frontier AI companies and across their supply chains is now a critical national security concern.

Even with strong internal defenses, weak links among vendors can expose frontier model providers to sophisticated attacks.

The DoD can help raise the cybersecurity bar by tying contract eligibility to high standards—creating market-wide incentives to improve.

Strengthening Frontier AI Cybersecurity Procurement

The United States’ strategy for outcompeting China on artificial intelligence (AI), motivated in large part by national security concerns, currently includes export controls on compute and, more recently, on model weights. This strategy aims to curb adversarial development and secure the United States’ lead, but it also increases the incentive for adversaries—prevented from building frontier models themselves—to attempt to steal AI model weights. This article argues that the success of these export controls crucially depends on strengthened cybersecurity measures at frontier model companies and throughout their supply chains.

In Securing AI Model Weights: Preventing Theft and Misuse of Frontier Models, Nevo et al. make several recommendations for frontier model providers, including:

Harden interfaces for model access against weight exfiltration;

Implement insider threat programs;

Invest in defense-in-depth (multiple layers of security controls that provide redundancy in case some controls fail);

Engage advanced third-party red teaming that reasonably simulates relevant threat actors;

Incorporate confidential computing to secure the weights during use and reduce the attack surface;

Proactively secure the supply chain to prevent hardware and software vulnerabilities from being introduced.

In particular, the Nevo et al. report highlights supply chain vulnerabilities as a major risk factor for AI model theft. Even if frontier model developers implement strong internal security, gaps in their suppliers’ software or infrastructure can be exploited. Achieving the level of security needed to thwart attacks from well-resourced state actors would require fully internalizing the supply chain, an approach that is currently infeasible and incompatible with existing development and deployment practices.

Even though frontier AI model weights are not yet formally recognized as national security assets, proactive measures should be taken now to prepare the broader ecosystem, which is sorely lacking in cybersecurity infrastructure, particularly in AI data centers.

In the United States, general-purpose frontier models are developed by private-sector companies, many of which have no affiliation with the Department of Defense (DoD) and may have no intention of seeking one. Yet, as these models grow more capable, their weights may nevertheless acquire national security significance, regardless of the developers’ intentions. Certain strategic but risky capabilities, such as autonomous hacking, may emerge as a byproduct of scale rather than through targeted development aimed at national defense or military application. This creates a growing risk that highly sensitive systems are being built outside the scope of national security infrastructure, without a mechanism to compel stringent cybersecurity protections.

Implementing these cybersecurity protections to prevent adversaries from stealing model weights may soon become a national security priority. I propose that the most promising yet underexplored mechanism available to the U.S. government is to leverage DoD public procurement regulations. There is already a strong regulatory basis, including enforcement mechanisms, which can be expanded for this purpose. The incentives for frontier AI model companies to comply are also high, given the scale of the DoD budget.

The role of public procurement and public-private partnerships in securing model weights

Federal public procurement—the process through which governments purchase goods and services—is governed by a complex regulatory framework designed to ensure transparency, competition, and value for taxpayer money. The Federal Acquisition Regulation (FAR) is the primary legal framework that comprehensively governs the entire process, with the Defense Federal Acquisition Regulation Supplement (DFARS) adding further requirements applicable to DoD contracting activities.

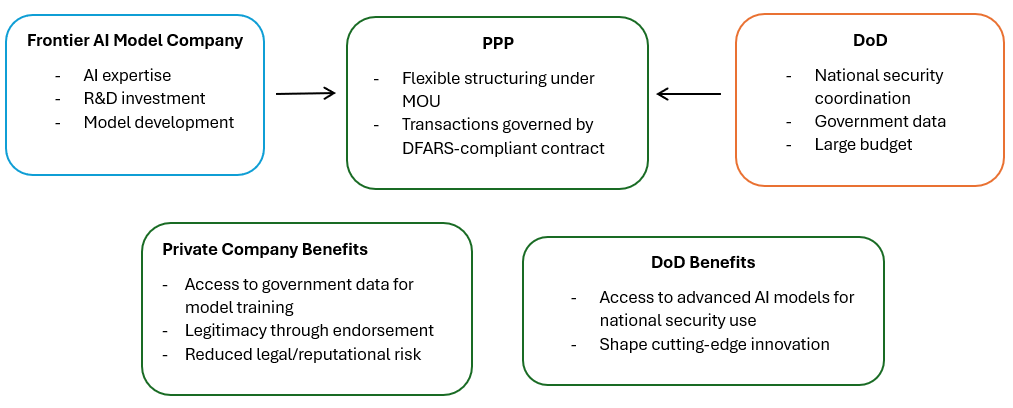

Public-private partnerships (PPPs), on the other hand, are arrangements between government entities and private-sector companies in which they collaborate to finance, build, and operate public infrastructure or deliver public services over an extended period. These partnerships involve sharing resources, risks, responsibilities, and rewards. In the United States, PPPs are often structured through a non-binding memorandum of understanding (MOU). However, when financial transactions occur, formal contracts must still comply with federal procurement laws such as the FAR. While no federal law comprehensively governs PPPs across the U.S. government, the DoD has PPP-specific regulations in place.

As AI models increasingly achieve capabilities with national security implications, PPPs are becoming a strategic necessity for the government. The DoD relies on private-sector AI expertise, innovation capacity, and privately funded development, while companies benefit from government data, national security coordination, and potentially reduced legal and reputational risk due to shared responsibility frameworks and the perceived legitimacy conferred by government endorsement. The large budgets for defense and national security contracts—which increasingly incorporate key AI components—along with the rising trend of the military partnering with frontier AI companies and testing their models, underscore the potential of PPPs to ensure the secure and effective deployment of AI.

Public procurement alone is ill-suited for such complex, long-term projects. However, the procurement regulations that apply to PPPs whenever financial transactions occur provide the mechanisms needed to reliably compel cybersecurity measures from private companies.

Compulsory public-private collaboration in national security

The U.S. government holds statutory authority under the Defense Production Act (DPA) to mandate private-sector participation in PPPs when necessary for national defense applications. For example, in March 2020, during the early stages of the COVID-19 pandemic, President Trump invoked the DPA to designate ventilators and personal protective equipment as essential to national defense, compelling private manufacturers to shift production toward public health needs. This use of the DPA demonstrates the government’s capacity to rapidly mobilize private-sector capabilities in response to an emergent threat by coordinating resources, directing production, and ensuring public access to essential technologies.

This power could be invoked to compel frontier AI model companies to make their models available to the DoD if national security-relevant capabilities are developed. The DPA grants the president the authority to issue regulations and orders to compel industry cooperation in support of national defense objectives and to obtain information from companies working in AI and other sensitive areas, thereby ensuring government oversight of critical technological advancements. In this way, the president could direct the development of frontier AI systems to optimize them for national security applications.

The importance of the DoD’s cybersecurity requirements

A frontier model cybersecurity standard that begins in DoD procurement could lay the foundation for broader adoption by all relevant frontier AI companies. We saw such proliferation with the Cybersecurity Maturity Model Certification (CMMC), which introduced a procurement-linked approach to cybersecurity certification within the DoD and helped solidify the voluntary NIST SP 800-171 standard as a broader benchmark for federal contractors. Cybersecurity requirements extending beyond the DoD will likely become necessary at some point, as not all relevant frontier AI model companies will contract with the DoD.

Aside from previous success in proliferating cybersecurity standards, making more stringent cybersecurity requirements part of DoD procurement regulations is a good place to start for two reasons. First, DoD procurement is a powerful lever because defense contracts are highly sought after due to their substantial monetary value. As AI models with advanced capabilities become increasingly integral to national defense and security, we can expect frontier model providers to expand their current efforts to partner with the DoD, either directly or through more established military contractors.

Second, DoD procurement offers built-in enforcement mechanisms: the governing contracts already include oversight mechanisms and audit rights that ensure adherence to stringent security standards. Public procurement, with its competitive bidding processes, could drive a “race to security,” encouraging AI developers to prioritize stringent security measures in order to win contracts. In addition, recent enforcement efforts against DoD contractors under the False Claims Act could be expanded and leveraged to compel compliance. More on that in the following section.

Utilizing existing cybersecurity requirements in public procurement to protect model weights

Federal cybersecurity requirements for contractors to date focus on protecting government information and critical infrastructure rather than securing private intellectual property (IP), such as model weights. Therefore, changes are needed. The status quo is exemplified in the FAR, DFARS, and the Federal Information Security Modernization Act (FISMA). Compliance programs like the Federal Risk and Authorization Management Program (FedRAMP) and CMMC share the same limited scope. FedRAMP standardizes cloud security compliance for federal contractors, ensuring that cloud service providers meet stringent security benchmarks before agencies procure their services. CMMC, applicable to defense contractors, enforces a tiered cybersecurity certification model based on NIST SP 800-171. Both currently aim only to secure government information held by contractors, not sensitive information belonging to the contractors themselves.

The Civil Cyber-Fraud Initiative is an aggressively pursued enforcement avenue that gives teeth to these cybersecurity obligations. Under this initiative, the Department of Justice (DOJ) increasingly uses the False Claims Act (FCA) to pursue government contractors that misrepresent their compliance with federal cybersecurity requirements. The initiative’s scope could easily be expanded to enforce cybersecurity requirements designed to protect AI model weights. It has already proven effective: in October 2024, the DOJ reached a $1.25 million settlement with Penn State for failing to implement required controls under DoD and NASA contracts, and in February 2025, it reached an $11.3 million settlement with Health Net Federal Services, which held a DoD contract to administer the TRICARE health program, over years of alleged false cybersecurity certifications. Notably, both cases were initiated by insiders under the FCA’s whistleblower provisions, highlighting how internal personnel can surface cybersecurity noncompliance even in the absence of an actual breach.

As stated above, cybersecurity measures must be in place not only at frontier AI model companies themselves but also throughout their entire supply chains. Recent progress on this front is encouraging. Under the Biden administration, awareness of supply chain vulnerabilities grew significantly. Executive Orders (EOs) 14028 (2021) and 14144 (2025) emphasize securing the software supply chain. They require contractors to document their supply chains and verifiably attest to secure development practices and vulnerability patching. The EOs also call for updates to FAR contract clauses that would enforce these security obligations. This shift stems from a recognition that cyber adversaries often exploit weaknesses in third-party vendors rather than directly targeting government systems. The M-22-18 Memorandum further reinforces this by requiring agencies to procure software only from vendors that attest to compliance with NIST’s Secure Software Development Framework (SSDF).

Additional evidence of this growing awareness appears in the 2024 and 2025 National Defense Authorization Acts (NDAAs), which impose stricter controls on technology acquisitions, particularly regarding foreign adversary influence in communication, surveillance, and semiconductor supply chains. The 2024 NDAA, for example, introduced provisions requiring increased supply chain visibility and rigorous testing, banning the acquisition of Chinese communication and surveillance technologies, and mandating that contractors certify their chip designs by attesting that they have analyzed and addressed potential points of unauthorized access and manipulation.

The National Security Memorandum issued in October 2024 sharpened this focus by requiring relevant departments to “identify critical nodes in the AI supply chain, and develop a list of the most plausible avenues through which these nodes could be disrupted or compromised by foreign actors. On an ongoing basis, these agencies shall take all steps, as appropriate and consistent with applicable law, to reduce such risks.”

Significantly, the same National Security Memorandum expands the traditional scope of cybersecurity—from protecting government information held by private companies to also protecting intellectual property, such as AI model weights. It states:

It is the policy of the United States Government to protect United States industry, civil society, and academic AI intellectual property and related infrastructure from foreign intelligence threats to maintain a lead in foundational capabilities and, as necessary, to provide appropriate Government assistance to relevant nongovernment entities.

Under the Defense Production Act, this Memorandum carries the force of law. However, its future under the Trump administration remains uncertain.

The April 2025 Office of Management and Budget (OMB) memoranda issued under President Trump, M-25-21 and M-25-22, which provide guidance for AI use and acquisition in government, explicitly rescind two Biden-era AI memoranda (M-24-10 and M-24-18) but not M-22-18 or the National Security Memorandum. While these memoranda clearly shift toward reducing red tape in procurement, both carve out AI used as part of a National Security System, preserving room for narrowly tailored, security-driven requirements. Indeed, there is evidence of a continuing awareness of the importance of securing the supply chain: both memoranda give preference to “American-made AI,” citing national security considerations. A procurement-focused strategy targeting frontier model cybersecurity within the DoD therefore fits cleanly within that carveout and advances core national security objectives without expanding regulatory scope elsewhere.

Regulatory measures taken during the Biden administration indicated a clear evolution in procurement security policy: a shift from protecting internal government data to securing complex supply chains, including initial steps toward safeguarding intellectual property such as AI model weights. While recent shifts in federal guidance signal a reassessment of where and how security requirements are applied, the imperative to protect critical technologies remains unchanged. In this context, channeling cybersecurity efforts through defense procurement offers a targeted, high-impact strategy consistent with enduring national security priorities.

A way forward

As indicated earlier, to secure the United States’ AI lead over China, the current administration is primarily leveraging export restrictions, an essential step in limiting access to advanced capabilities. However, these efforts must be complemented by equally robust cybersecurity requirements. The technical thresholds that trigger export restrictions on compute and model weights were first introduced under the Export Control Reform Act of 2018 and significantly tightened by the Trump administration on March 25, 2025. Because these thresholds are based on the scale and capability of frontier models, they serve as a proxy for identifying systems of geopolitical sensitivity. The same thresholds should therefore serve as a baseline for determining which frontier AI models are subject to heightened cybersecurity standards in the DoD procurement process, such as those recommended by Nevo et al. (see above).

While export controls have dominated the United States’ AI strategy toward China, cybersecurity has received comparatively little attention. Yet both areas deserve equal focus. A predictable result of the current export control strategy is an increased incentive for Chinese state-backed actors to steal model weights. The primary bottleneck for Chinese AI companies is training, not inference; stealing the weights of more powerful U.S. models would let them skip training altogether. To mitigate this risk, the United States must rebalance its approach by strengthening cybersecurity defenses for frontier models. Strong cybersecurity is essential regardless of which actors (states, corporations, or individuals) may attempt to exploit vulnerabilities. Together with export controls, such protections will form the secure foundation necessary for responsible AI development, deployment, and global competitiveness. Because securing frontier AI model companies and their supply chains is inherently difficult, these efforts must begin now, before AI capabilities reach a point at which model weights become definitive national security assets.

It should go without saying that open-weight model deployment would render many of these measures ineffective, since publicly released weights are available to anyone, including adversaries. Yet current export controls still include an exception for open-weight models. Once AI models have national security implications, any effective U.S. policy must also address the open release of such powerful models.

Despite growing stringency, current cybersecurity measures in DoD procurement remain insufficient to protect frontier AI model weights from well-resourced, hostile actors, such as Chinese state-sponsored hackers. The National Security Memorandum’s expansion of cybersecurity efforts beyond traditional government-focused frameworks marks an important step toward securing the frontier AI supply chain. However, it remains a preliminary directive, focused primarily on research and risk identification, and its future under the new administration is uncertain.

Next steps for the Trump administration: an executive order to update DoD procurement standards

To address this shortcoming, DoD procurement and PPP structures must evolve. Current regulations impose important protections but do not account for the full spectrum of attack vectors that a state-backed adversary might exploit in the future. Additionally, existing requirements may appear adequate for the status quo on paper, but the persistent gap between those standards and their implementation across the DoD contractor base raises serious doubts about their real-world effectiveness.

This implementation gap is not an argument against further tightening requirements. Rather, it underscores the need for clearer, enforceable, and purpose-built standards specifically designed to address the unique risks of frontier AI models. Without targeted measures tailored to model weight security, current frameworks will remain misaligned with the threats posed by state-backed adversaries.

Implementing this level of security within the DoD would require a new executive order or national security memorandum focused on DoD procurement. This order should formally recognize AI model weights as assets of national security relevance in the defense context and direct the DoD to establish classified-tier protections for their procurement, development, storage, and deployment.

The order could instruct the DoD, in coordination with the FAR Council, to propose tailored updates to DFARS addressing AI model weight security. A targeted rollout beginning with the most critical nodes in the DoD’s AI supply chain would be most effective. In parallel, the order could direct the National Institute of Standards and Technology (NIST) to develop a specialized security framework for frontier models used in defense, modeled on NIST SP 800-171 but adapted to the unique risks associated with model weights.

To enforce these protections, a DoD-specific certification regime drawing from the CMMC could be applied to contractors and cloud providers, establishing a tiered compliance framework with increasingly stringent requirements as model capabilities advance. Eligibility for defense contracts would require meeting the applicable tier of compliance. Oversight could be further supported through security audits conducted by the DoD and, where appropriate, by the Cybersecurity and Infrastructure Security Agency (CISA). False Claims Act enforcement should specifically target false certifications of compliance with model weight security requirements.

Challenges and concerns

While mandating stringent AI security measures through DoD procurement rules is a powerful tool, it has critical limitations. Frontier AI model developers who do not seek government or DoD contracts would fall outside this framework, meaning some of the most advanced models could remain exposed if their creators prioritize commercial deployment over defense-focused PPPs. This is particularly relevant given the high compliance costs and operational constraints that such regulations would impose, possibly deterring companies from engaging with defense projects altogether. Additionally, AI companies may be reluctant to submit to DoD or CISA oversight due to concerns over proprietary secrecy, innovation constraints, and geopolitical optics. The president could overcome such a challenge by compelling cooperation under the DPA.

Furthermore, the cost burden on the supply chain would be immense, potentially limiting competition and innovation, as only the largest firms could afford compliance. Finally, any overly rigid procurement security structure risks slowing down AI progress in national security contexts, where rapid iteration and deployment are often critical. Ways to offset these downsides could include increased government investment in cybersecurity for model developers and their supply chains. Developing the requirements in partnership with industry could also ease the compliance burden by ensuring that they are cost-effective and scale reasonably. Without a carefully balanced approach, this strategy could create unintended bottlenecks while still leaving major vulnerabilities in the broader AI ecosystem.