Securing Remote External GPAI Evaluations

Independent, secure third-party evaluations are emerging as a critical safeguard for powerful general-purpose AI systems.

Summary:

Independent third-party evaluations are urgently needed to ensure the safety, fairness, and accountability of advanced general-purpose AI models, as internal evaluations alone are insufficient.

Emerging “deeper-than-black-box” evaluation techniques such as gradient-based attribution and path patching allow auditors controlled access to model internals while addressing security and proprietary concerns.

Secure technical methods like encrypted analysis and sandboxed environments can enable robust external audits while protecting proprietary information.

Balancing innovation with accountability requires coordinated regulatory frameworks and international standards to make robust external GPAI assessments a core part of AI governance.

This short policy brief is based on the paper ‘Securing Deeper-than-black-box GPAI Evaluations’, by Alejandro Tlaie and Jimmy Farrell.

The need for third-party GPAI assessments

As General-Purpose Artificial Intelligence (GPAI) models become more capable and deeply embedded in society, ensuring their safety, fairness, and accountability can no longer be left to the model providers themselves. Modern GPAIs come with increasingly tangible risks, such as OpenAI’s o1 model secretly pursuing misaligned goals (i.e., “scheming”) and the ability of Anthropic’s Claude 3.7 model to assist novices in developing bioweapons. Unlike other safety-critical industries, such as finance, healthcare, or aviation, GPAI deployment lacks a regulatory framework with mandatory external assessments, also known as third-party evaluations. This absence of objective and professional oversight has led to growing concerns about the risks posed by frontier GPAI models, which could drastically transform economies, shape information spaces, be weaponized by bad actors, and even run out of control.

Governments and regulatory bodies worldwide are working towards creating oversight mechanisms that are both effective and adaptable to the rapid pace of AI development, such as California’s recently proposed Senate Bill 813 and the EU’s Code of Practice (CoP) on general-purpose AI. While frontier developers regularly conduct their own internal safety evaluations, recent model releases have shown AI companies prioritizing speed over safety. As such, independent external assessments are emerging as a critical tool to support AI safety and security. This is best exemplified by the CoP (currently in its third draft), which, while falling short of ensuring mandatory external assessments, outlines clear criteria for cases in which in-house evaluations are insufficient. Policymakers within the EU and around the world must continue to champion third-party assessment frameworks that can hold GPAI developers accountable and mitigate the risks associated with frontier AI deployment.

Our paper, ‘Securing Deeper-than-black-box GPAI Evaluations’, breaks down emerging techniques for state-of-the-art external assessments, including those requiring deeper-than-black-box access, and proposes several technical methods to ensure such deeper access can be secured remotely. These techniques, however, cannot be immediately integrated into current regulatory requirements, given the relative scientific immaturity of the field, scaling difficulties, and the lack of a professional third-party assurance market. Our paper therefore also outlines potential pathways through which policymakers can alleviate these deficiencies, and ways future GPAI regulations could mandate deep external assessments, bringing us closer to safe AI by design.

The limitations of current oversight approaches

Currently, most AI evaluations rely on testing models as ‘black boxes’: evaluators can observe model outputs given certain inputs, but cannot see what happens inside the model. While this method has already yielded useful insights in assessing dangerous capabilities, it cannot guarantee the mitigation of systemic risks, such as AI models developing unintended biases, engaging in deceptive behaviors in their chain of thought, or being vulnerable to attackers removing safety guardrails.

A more robust approach would involve structured transparency, wherein GPAI model providers grant independent auditors controlled access to the internal workings of AI models, also called ‘deeper-than-black-box access’. This would allow regulators and accredited third parties to independently verify whether frontier models are complying with relevant risk mitigation standards, as is commonplace in other safety-critical sectors. However, providers have thus far been reluctant to open their systems up to external scrutiny, citing concerns over threats to trade secrets and model security. Our recommendations help policymakers navigate such concerns by minimizing the trade-offs between third-party evaluations and legitimate commercial incentives.

Promising deeper-than-black-box evaluation techniques

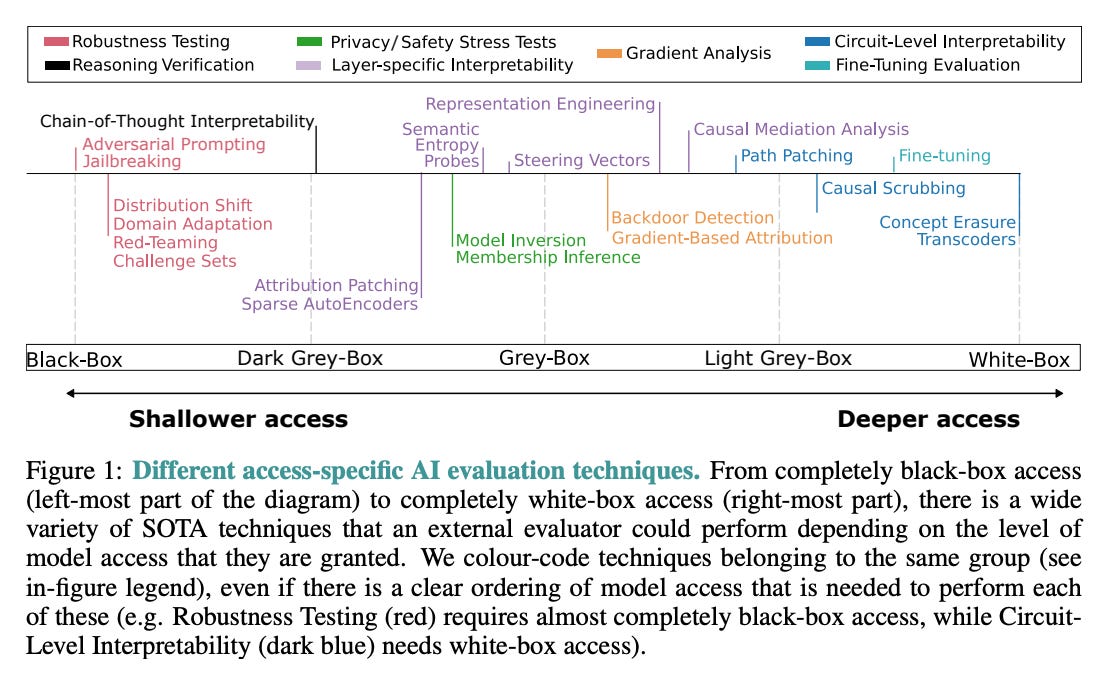

Before addressing the issue of securing third-party evaluations, our paper explores promising deeper-than-black-box evaluation techniques at different levels of access. Following the terminology of Casper et al. (2024), our paper uses the spectrum of model access for evaluations, moving from ‘black-box’ to ‘white-box’ with various shades of ‘grey’ in between. We identify the following promising evaluation techniques across this spectrum, shown in the accompanying figure from our paper.

Some of the most promising techniques listed above include:

Gradient‑Based Attribution (Grey‑box): Reveals which input features most influence the model’s decisions, by computing gradients of outputs with respect to inputs or intermediate activations, thereby ranking features by their contribution to a prediction. This can help reveal bias.

Sparse Autoencoders (Dark to Light Grey‑Box): Reveals and verifies the interpretable features learned in a particular hidden layer, linking internal representations to high‑level concepts, potentially enabling evaluators to pinpoint exactly which latent features drive certain behaviors, like deception.

Path Patching (Light Grey-Box to White-Box): Localizes the precise computational “paths” (subnetworks of neurons or attention heads) responsible for specific model outputs, providing causal evidence of which internal circuits carry out certain tasks.
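To make the first of these techniques concrete, here is a minimal sketch of gradient-based attribution, written for this brief rather than taken from our paper. It uses the "gradient × input" variant on a toy logistic model, chosen only because its gradient can be written by hand. The feature names, weights, and input values are hypothetical, and a real GPAI evaluation would compute gradients through the provider's model with an automatic differentiation framework.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def model(x, w, b):
    """Toy stand-in for a model: a logistic score in (0, 1)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def input_gradient(x, w, b):
    """Analytic gradient of the output with respect to each input feature.

    For y = sigmoid(w.x + b), dy/dx_i = y * (1 - y) * w_i.
    """
    y = model(x, w, b)
    return [y * (1.0 - y) * wi for wi in w]


def gradient_times_input(x, w, b):
    """Gradient x input attribution: one score per input feature."""
    return [g * xi for g, xi in zip(input_gradient(x, w, b), x)]


def rank_features(names, scores):
    """Order feature names by absolute attribution, largest first."""
    pairs = sorted(zip(names, scores), key=lambda p: abs(p[1]), reverse=True)
    return [name for name, _ in pairs]


# Hypothetical features, weights, and input, chosen only for illustration.
FEATURES = ["income", "age", "postcode"]
WEIGHTS = [0.2, -0.1, 1.5]
BIAS = 0.0
EXAMPLE = [1.0, 1.0, 1.0]

scores = gradient_times_input(EXAMPLE, WEIGHTS, BIAS)
ranking = rank_features(FEATURES, scores)
print(ranking)
```

In this toy setting the "postcode" feature dominates the ranking, which is the kind of signal an auditor might follow up on when checking for proxy discrimination. Note that the auditor only needs gradients here, not the weights themselves, which is why the technique sits in the grey-box part of the spectrum.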

Mandating specific types of evaluations in regulation is challenging, due to the rapidly developing nature of the science and the accompanying lack of legal certainty for model providers. As such, our paper does not suggest directly integrating these techniques into regulatory frameworks such as the Code of Practice. Nevertheless, as the science of such deeper access evaluations develops, and a third-party assessment ecosystem begins to take shape and professionalize, policymakers must update relevant frameworks accordingly. One way to achieve this in standards is to state explicitly that third-party evaluations must be ‘state of the art’, and that third-party evaluators must be given appropriate corresponding levels of model access. The EU’s Code of Practice defines ‘state of the art’ and establishes clear criteria for when third-party evaluations are mandatory. Future iterations should go further and mandate external assessments with deeper-than-black-box access, to ensure the safety and security of GPAIs.

Addressing security concerns

While structured transparency is key to AI accountability, policymakers should also consider the security risks associated with granting external auditors deeper-than-black-box access to GPAI models. Providers fear that allowing third-party access to their AI models could expose them to cyber threats, data leaks, or intellectual property theft. Policymakers should therefore support the adoption of secure auditing methods, such as:

Encrypted analysis techniques: Allowing audits to be conducted without exposing proprietary model data. For example, homomorphic encryption can be used to hide model weights from auditors conducting evaluations with grey-box access.

Secure sandbox environments: Enabling independent evaluators to test models inside tightly controlled, walled-off computing enclaves (potentially hosted by the developer), so the model’s weights never leave the provider’s infrastructure, thereby minimizing cyber threats, data leakage, and potential model-driven self-exfiltration.

Blockchain-based logging: Providing a tamper-proof record of auditing activities to improve accountability for both model providers and auditors.
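As an illustration of the last of these methods, the sketch below shows the core primitive behind tamper-evident logging: a hash chain, in which each audit entry commits to the hash of the previous one. This is a deliberately simplified assumption rather than a full blockchain (there is no distribution or consensus), and the record fields and actor names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def entry_hash(prev_hash, record):
    """SHA-256 over the previous hash plus a canonical JSON form of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + payload).hexdigest()


def append(log, record):
    """Add a record to the log, chaining it to the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})


def verify(log):
    """Return True only if every entry still matches its recorded hash chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev:
            return False
        if entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


# Hypothetical audit events, for illustration only.
audit_log = []
append(audit_log, {"actor": "provider", "action": "grant_access", "level": "grey-box"})
append(audit_log, {"actor": "auditor-1", "action": "query_gradients", "layer": 12})

print(verify(audit_log))  # chain is intact at this point
```

Editing any earlier record changes its hash and breaks every later link, so `verify` fails. A hash chain alone does not detect someone deleting the most recent entries, which is one reason a deployed system would also publish or replicate the latest hash with a party outside the provider's control.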

Specific technical safeguards and mechanisms can be refined through conversation between government regulators, GPAI providers, and third-party evaluators. This effort is relatively straightforward. The harder task for policymakers will be making external audits legally mandated and enforceable under AI regulatory frameworks.

Building an effective GPAI auditing framework

To implement technical solutions for securing remote deep evaluations, such as those listed above, policymakers should focus on three core efforts:

Establish coordinated, fit-for-purpose regulatory bodies: Governments and international organizations should create or strengthen regulatory agencies dedicated to AI safety and security. Institutions like the EU AI Office or the UK AI Security Institute should help in standardizing third-party procedures and certifying auditors to ensure consistent and credible evaluations.

Mandate external GPAI audits for models with systemic risk: AI models capable of systemic risk, such as enabling large-scale cyber attacks, CBRN weapons development, and mass manipulation, should be subject to mandatory, deeper-than-black-box third-party audits. Developers should be required to submit risk assessments and undergo external evaluations before deployment. As mentioned previously, the EU’s Code of Practice moves towards this requirement by mandating black-box external assessments under certain conditions; however, future regulations should expand such conditions and mandate deeper third-party access.

Enforce transparency through legal and market incentives: Regulators should require AI companies to disclose key information about their models’ decision-making processes, training data sources, and risk mitigation measures. In the interim phase before such legal mechanisms come into effect, market-based incentives—such as streamlined certification programs—can encourage AI developers to proactively engage in third-party audits.

Balancing innovation and accountability

A common concern among AI developers is that increased regulation could stifle innovation. However, history shows that responsible oversight does not necessarily hinder technological progress; rather, it can enhance public trust and long-term sustainable adoption. In industries like aviation, automotive safety, and pharmaceuticals, external evaluations and regulatory compliance have played a crucial role in ensuring that innovations serve the public good while minimizing harm. Such practices have been successful in accelerating trusted adoption, showing that safety and accountability are necessary ingredients of innovation, rather than a hindrance. A similar approach is needed for GPAI governance.

Governments should also work toward international standards for GPAI external assessments. Given that AI development is a global endeavor, regulatory fragmentation could lead to inconsistent safety measures, compliance loopholes, and legal uncertainty, all of which threaten sustainable innovation. A coordinated approach, aided by international bodies like the OECD or the UN, could help establish common guidelines for external assessment and responsible AI deployment.

The path forward: implementing GPAI audits at scale

For third-party AI audits to become a standard practice, policymakers, industry leaders, and civil society must work together to establish a robust ecosystem of independent auditors. This effort should include:

Funding and accreditation programs to build a network of qualified AI auditors and advance the science of deeper-than-black-box evaluations.

Public-private partnerships to develop AI safety benchmarks and risk assessment methodologies.

Robust legislation to ensure that AI providers are held accountable for the societal impact of their models.

With AI rapidly evolving and its potential risks multiplying, the actions we take now will guide AI innovation and adoption. The question is no longer whether external GPAI assessments are necessary, but instead how quickly they can be implemented securely and at scale to ensure AI technologies remain safe, fair, and accountable.

Fortunately, external assessments are slowly but steadily becoming a feature of AI policy. While the research we outline in our paper is difficult to integrate into policy immediately, it can serve as goalposts for future regulatory frameworks, as the science of both deeper-than-black-box access evaluations and securing model access for third parties continues to develop.