AI Companies’ Safety Research Leaves Important Gaps. Governments and Philanthropists Should Fill Them.

Many of the leading AGI companies – particularly OpenAI, Google DeepMind and Anthropic – say they're worried they won't be able to control future AI systems. All three companies have responded to this concern by performing technical research to reduce hazards posed by future AI systems – sometimes referred to as ‘AI safety’ research. How are they going about this research, and what may they be missing?

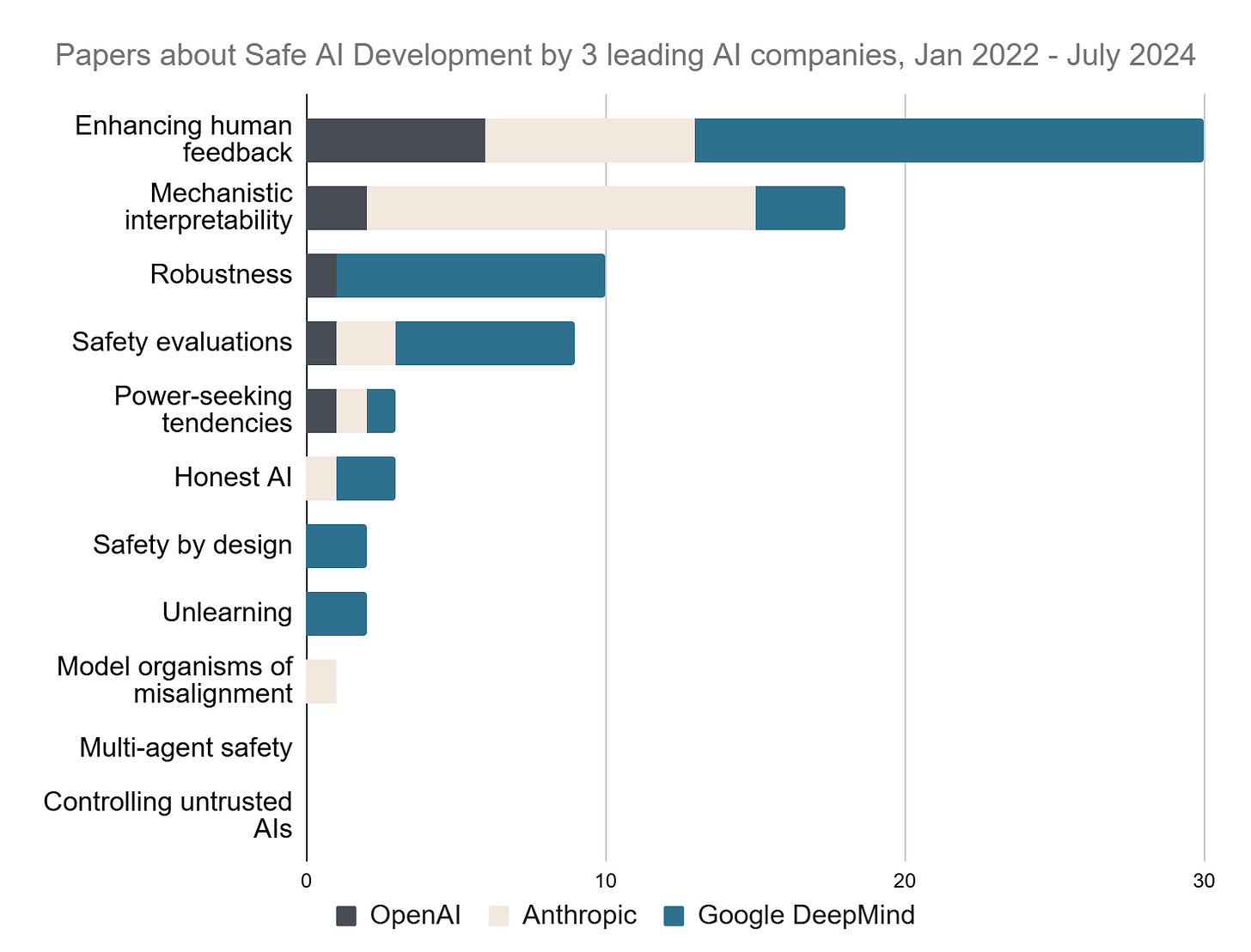

Our recent analysis shows that their efforts are concentrated in just a few main areas. Of the 78 AI safety papers published by these three companies between January 2022 and July 2024, 62% were in the ‘enhancing human feedback’ or ‘mechanistic interpretability’ categories. The other nine areas in our taxonomy of technical safety approaches received less attention from the companies. The bottom five categories averaged just one paper each.

Published papers provide a window into companies' safety research priorities, though they tell only part of the story. Some research remains unpublished for good reasons – such as to protect trade secrets or prevent malicious actors from exploiting knowledge of safety measures to evade them.

Enhancing human feedback and mechanistic interpretability are the areas best covered by leading AGI companies

Enhancing human feedback. This key AI safety approach involves human evaluators reviewing language model outputs across various contexts. The evaluator rates the desirability of the model’s responses based on principles like being helpful, honest, and harmless. This approach, known as ‘reinforcement learning from human feedback’ (RLHF), was pioneered in 2017 by researchers from OpenAI and Google, and is widely used to make chatbots such as ChatGPT behave like helpful assistants.
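To make the idea of learning from evaluator ratings concrete, here is a minimal sketch of one common way to turn human judgments into a training signal: fitting a reward model on pairwise comparisons, where an evaluator picked the better of two responses. This is an illustration under stated assumptions, not a description of any company's pipeline. The two features, the toy comparisons, and the function names are all hypothetical, and real systems score responses with a neural network rather than a two-weight linear model.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def reward(weights, features):
    """Score a response as a weighted sum of its (hypothetical) features."""
    return sum(w * f for w, f in zip(weights, features))


def train_reward_model(comparisons, n_features, lr=0.5, epochs=200):
    """Fit weights so that responses the evaluator chose score higher.

    Each comparison is a (chosen_features, rejected_features) pair.
    The loss for a pair is -log(sigmoid(r_chosen - r_rejected)),
    minimized here with plain stochastic gradient descent.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in comparisons:
            p = sigmoid(reward(weights, chosen) - reward(weights, rejected))
            for i in range(n_features):
                # Gradient step: push the chosen response's score up
                # relative to the rejected one, more so when p is low.
                weights[i] += lr * (1.0 - p) * (chosen[i] - rejected[i])
    return weights


# Hypothetical features: [answers the question, refuses a harmful request]
comparisons = [
    ([1.0, 0.0], [0.0, 0.0]),  # a direct answer beat a non-answer
    ([0.0, 1.0], [0.0, 0.0]),  # a refusal beat complying with a harmful prompt
    ([1.0, 0.0], [0.0, 0.0]),
]
weights = train_reward_model(comparisons, n_features=2)
```

In a full RLHF setup, a reward model of this kind would then be used to fine-tune the language model itself, so that it produces responses the reward model scores highly.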

Without RLHF, language models simply predict the next most likely text based on their training data, potentially producing nonsensical responses. For instance, a model might respond to 'What is the capital of Peru?' with 'What is the capital of Ecuador?', if it had encountered sequences of such questions during training. Developers can also use RLHF to make chatbots refuse to answer certain questions. For example, if the user asks the model to generate a recipe for a bioweapon, it’s clearly sensible to train the model to refuse such prompts. Other cases are more debated, such as whether chatbots should be able to produce opinions that others might find offensive.[1]

In our analysis, 38% of AI safety papers from OpenAI, Google, and Anthropic were in the ‘enhancing human feedback’ category. Research in this category focuses on extending RLHF by developing better ways to convert data on human preferences into safe and aligned AI systems, particularly as the AI systems in question become more capable. It makes sense that enhancing human feedback is a major focus of companies’ safety research; it aligns well with profit incentives. An unhelpful chatbot that just responds to questions with more questions would not be a bestseller.

Mechanistic interpretability is the second most common topic of corporate AI safety research, comprising 23% of papers. Think of it as “neuroscience for AI,” peering inside the otherwise inscrutable workings of AI models to understand how they process information and make decisions.

Anthropic has led the way in mechanistic interpretability, including releasing a gimmick version of Claude in which researchers had identified the part of the language model representing the Golden Gate Bridge, and turned that dial way up. Whatever question was asked, this version of Claude would weave the bridge into its answer, or even respond as a personification of the bridge.

More seriously, a better understanding of the inner workings of language models could allow AI companies to determine what neural network pathways are in use when AI models lie (perhaps through observing what happens when the model is explicitly instructed to do so). If these pathways are activated unexpectedly, for instance when we ask an AI about its motivations or beliefs, this could trigger an alarm that the AI may be responding deceptively. It might also become possible to do ‘AI neurosurgery’ to remove the ability of AI systems to be deliberately deceptive.
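The alarm idea above can be sketched in a few lines. This is a toy illustration, not how any lab actually does it: the activation vectors are made up, the function names are hypothetical, and a simple difference-of-means direction stands in for the far more involved techniques used in real interpretability research. The assumption is that we have recorded internal activations both when a model was explicitly instructed to lie and when it answered honestly.

```python
def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fit_direction(lying_acts, honest_acts):
    """Return a 'lying' direction and a threshold halfway between the two groups."""
    mu_lying = mean_vector(lying_acts)
    mu_honest = mean_vector(honest_acts)
    direction = [l - h for l, h in zip(mu_lying, mu_honest)]
    midpoint = [(l + h) / 2 for l, h in zip(mu_lying, mu_honest)]
    return direction, dot(direction, midpoint)


def raises_alarm(activation, direction, threshold):
    """Flag an activation that projects onto the lying side of the threshold."""
    return dot(activation, direction) > threshold


# Made-up 3-dimensional activations, for illustration only.
lying = [[0.9, 0.1, 0.8], [1.0, 0.2, 0.7]]    # model instructed to lie
honest = [[0.1, 0.1, 0.2], [0.2, 0.3, 0.1]]   # model answering honestly
direction, threshold = fit_direction(lying, honest)

suspicious = raises_alarm([0.95, 0.1, 0.9], direction, threshold)
benign = raises_alarm([0.1, 0.2, 0.1], direction, threshold)
```

Here `suspicious` comes out `True` and `benign` comes out `False`. Whether anything this simple would generalize to unprompted deception in a real model is an open research question.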

Safety research published by OpenAI, Google, and Anthropic concentrates on just a few approaches

Of the papers we analyzed, ‘robustness’ and ‘safety evaluations’ comprised 13% and 12%, respectively. Robustness research aims to improve performance and reduce unpredictability when the AI system is exposed to unusual situations. Safety evaluations measure potentially dangerous properties of AI systems, such as whether they know how to make biological weapons. There were few or no papers in our dataset for the remaining seven categories.

The role of governments and philanthropists

What should we make of the fact that OpenAI, Google, and Anthropic, at least in their papers, focus so strongly on enhancing human feedback and mechanistic interpretability?

This focus likely results, in part, from AI companies identifying these areas as the most tractable. AI companies want to demonstrate that they care about safety without spending resources on unpromising research directions. The fact that many people in these companies have been expressing concerns about safety for years – well before AI became mainstream – suggests that their stated concerns are at least somewhat sincere.

However, AI companies’ economic incentives are not perfectly aligned with the public interest. For example, companies might be disproportionately likely to pursue research that quickly improves their products, beyond just making them safer. RLHF is an example of this, often making systems more helpful without resolving deeper safety concerns. AI companies will also tend to favour safety work that is more visible and salient to external observers, to bolster their reputation as safety-oriented. Moreover, companies whose success is largely built on scaling and publicly deploying large models might be wary of safety approaches that challenge this paradigm. Indeed, several people who resigned from safety teams at OpenAI last year said they do not trust the company to prioritize careful safety processes above boosting profits by shipping more features.

All of this suggests that governments and philanthropists have a crucial role in the AI safety funding landscape.

Governments can reshape AI companies’ incentives to nudge them to pursue certain kinds of safety research. For example, governments might want to incentivize AI companies to do more work on safety evaluations. If so, governments could write rules requiring high-quality evaluations before a system can be deployed in specific contexts, or use their procurement power by only purchasing systems backed by such evaluations.

Moreover, both governments and philanthropists could fund safety research that AI companies, for various reasons, are not prioritizing.[2]

Safety approaches for governments and philanthropists to pursue

Several AI safety approaches that are presently neglected by leading AI companies are promising candidates for government and philanthropic funding:

Safety evaluations. These measure whether AI systems have concerning properties, such as knowledge about producing bioweapons, or a tendency to behave in ways that the developer does not intend. Even though AI companies can and do perform safety evaluations and tests on their own models, it is more credible and trustworthy for a third-party evaluator without conflicts of interest to do this work. Indeed, AI companies have submitted their pre-release models for safety testing by nonprofits like METR and Apollo Research, and government bodies like the UK and US AI Safety Institutes. It will be key for these third-party evaluators to remain intellectually independent of the AI companies whose models they evaluate, rather than accepting companies’ money to rubber-stamp models as ‘safe’ despite weak testing. The risk is illustrated by financial rating agencies, which became too closely tied to the banks they were evaluating in the lead-up to the 2008 financial crisis.

AI control. External researchers can also contribute by pioneering novel safety methodologies that AI companies can then incorporate if initial results are promising. ‘AI control’ is one such approach from Redwood Research. It focuses on using oversight mechanisms to prevent even misaligned AI systems from being able to misbehave catastrophically. While there were no ‘AI control’ papers in our dataset, companies are likely to act on promising results. This is analogous to how in biomedical research, initial breakthroughs are often made in academia or at startups, and large pharmaceutical companies then refine the ideas and deploy products at scale.

New AI paradigms. External researchers can additionally play a valuable role by doing ambitious blue-sky work that companies focused on rapid results and monetizability are less likely to pursue. For example, the UK government’s Safeguarded AI program seeks to pioneer radically new ways to develop AI systems that can be mathematically proven to have certain desirable safety features. Safeguarded AI is providing tens of millions of pounds over several years to mainly academic and nonprofit researchers to implement ambitious AI safety projects. Only 3% of the papers in our dataset were in this ‘safety by design’ category. The existing big AI companies might be particularly incentivized to avoid safety research involving different AI paradigms; these companies are winning in the competition to develop AI systems in the current paradigm and might not want to jeopardize their position.

Ways forward for governments and philanthropists

AI companies do valuable work towards making their products safe. However, there are gaps in their research focuses, which governments and philanthropists can help to fill. With mechanisms like procurement rules, governments can incentivize companies to do more – and different kinds of – AI safety research. And with funding, governments and philanthropists can promote promising research approaches that companies are neglecting.

Thank you to Alex Petropoulos, Jamie Bernardi, Renan Araujo, and Zoe Williams for helpful comments on drafts of this piece, to Kimya Ness and Anine Andresen for copy-editing, and to our employer, the Institute for AI Policy and Strategy, for supporting this work.

[1] In any case, users can sometimes use ‘jailbreaks’, disguising their question as something innocuous, to circumvent restrictions.

[2] Governments could consider making AI companies fund this research, such as with taxes on deploying high-risk models, or fees to evaluate the safety of high-risk models. This would avoid taxpayers paying for a risk that is created by AI companies in general.

Great work, thanks for sharing!

I was recently thinking about capability restrictions, similar to the Unlearning paradigm, but going beyond specific dangerous domains. I thought it might become desirable at some future point to restrict the reasoning capabilities of AI systems more generally in order to make them less able to behave in unintended and catastrophic ways. This plausibly falls more into the scope of technical AI governance topics, but I suppose this would also require some further technical safety research to be implementable.

Are safety evaluations neglected by frontier labs or is it just that it tends to be released as a model card rather than as a paper?